Cherry-Picking Data

Science is a methodology that generates belief systems. If you believe in the scientific method, then you probably think that when science supports some belief, it is rational to believe it. In general this means that there are theories and experiments that support this belief. However, experiments sometimes come up with contradictory answers.

Here is one reason why: after collecting data, one often has to run statistics on it to know whether the difference found between two conditions is worth paying attention to. When we say that a scientific finding is statistically significant, we mean that a difference that large would be very unlikely to arise from chance alone. For example, domestic cats are, on average, smaller than domestic dogs. However, some cats are bigger than some dogs. You need a big enough sample for the statistics to say that you have a legitimate finding; otherwise it could be that, due to chance, you just happened to measure big cats and small dogs.

Significance means that the result was unlikely to be due to chance. But it still could be. When a scientific finding is "significant," it typically means that if there were no real effect, a result at least that strong would show up by chance only 1% or 5% of the time. This doesn't sound like much, but if you run 100 studies of an effect that isn't there, you should expect somewhere between one and five of them to find a significant difference anyway.
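The arithmetic behind that "one to five out of 100" figure can be sketched in a few lines. This is only an illustration: the function names are made up for this post, and it assumes the studies are independent and that there is truly no effect to find.

```python
def expected_false_positives(n_studies: int, alpha: float) -> float:
    """Average number of studies that reach significance by chance alone.

    Assumes no real effect exists and each study uses threshold `alpha`.
    """
    return n_studies * alpha


def prob_at_least_one(n_studies: int, alpha: float) -> float:
    """Chance that at least one of `n_studies` independent null studies
    comes out significant."""
    return 1.0 - (1.0 - alpha) ** n_studies


if __name__ == "__main__":
    for alpha in (0.01, 0.05):
        print(alpha, expected_false_positives(100, alpha))
    # Chance that a batch of 20 null studies contains at least one "hit"
    print(round(prob_at_least_one(20, 0.05), 3))
```

The second function is the more sobering one: even a modest pile of studies on a nonexistent effect is more likely than not to contain at least one significant result.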

This is a problem plaguing fields like parapsychology. There are (probably) lots of studies out there that show that people can do telekinesis: well-done studies that show statistical significance. However, if your threshold of significance is 5%, then on average about one out of every 20 studies will have a favorable result, even if telekinesis does not exist.
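The one-in-20 figure can be checked with a small simulation. The sketch below is an assumption-laden toy, not a model of any real parapsychology study: each simulated "study" compares two groups drawn from the same distribution (so there is no effect by construction), uses a simple two-sided z-test with a known standard deviation of 1, and all names and sample sizes are hypothetical.

```python
import random
from statistics import NormalDist, mean


def null_study_p_value(rng: random.Random, n_per_group: int = 30) -> float:
    """Simulate one study in which both groups come from the same population."""
    a = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
    b = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
    # Standard error of the difference in means when each group has sd = 1
    se = (2.0 / n_per_group) ** 0.5
    z = (mean(a) - mean(b)) / se
    # Two-sided p-value from the standard normal distribution
    return 2.0 * (1.0 - NormalDist().cdf(abs(z)))


def false_positive_rate(n_studies: int = 2000, alpha: float = 0.05,
                        seed: int = 0) -> float:
    """Fraction of simulated null studies that come out 'significant'."""
    rng = random.Random(seed)
    hits = sum(null_study_p_value(rng) < alpha for _ in range(n_studies))
    return hits / n_studies


if __name__ == "__main__":
    print(false_positive_rate())
```

With enough simulated studies, the printed fraction should land close to the chosen threshold, which is the point: the "hits" are produced by the threshold, not by any real effect.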

There is a bias in publishing, too: journals are reluctant to publish "null results," or results that are not significant.

Okay, so what does this mean?

This means that even when someone can point to a study that supports some idea, there may be many other studies, published or not, that failed to find the same result. So when people publish and cite things, they might be citing the studies that support their argument and ignoring the ones that don't. In science we call this "cherry picking data," because it's like going to the cherry tree and only taking the fruit that looks most delicious.

So how is the layperson to know whether a paper cherry picks data or not? Indeed, how should a trained scientist even know? I have never heard of a good answer to this question.

But I have a suggestion.

Let's suppose you want to argue, in a publication, that the color red is associated with violence. What you could do is search for scientific papers that have investigated this issue. For example, you could search a psychology database such as PsycINFO for "red color association experiment violence," or some combination of keywords that returns a reasonable number of hits. Then you could review those papers and report on whether or not each one supports the claim.

Granted, if people don't write like this, the reader still doesn't know if data has been cherry-picked or not. It's more of a writer's strategy than a reader's. And there are still ways to cheat this system: you could cherry pick the search engine, you could cherry pick the keywords, etc. However, it still might instill a bit more confidence in your reader that you've done your due diligence in terms of looking at previous work. I find that when people report some evidence counter to their claims, along with the evidence supporting them, I trust the source more, and am more likely to take it seriously.

Right now there is just trust that the author is reporting all relevant studies. My method suggests making this a bit more quantitative by reporting search methodology.
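As a rough sketch of what "reporting search methodology" might look like in practice, the snippet below turns a search and a list of per-paper verdicts into a one-line summary a writer could include. Everything here is hypothetical: the function name, the three verdict labels, and the example verdicts are placeholders for illustration, not results from any real search.

```python
from collections import Counter


def summarize_search(database: str, query: str, verdicts: list[str]) -> str:
    """Build a one-line report of a literature search.

    `verdicts` holds one label per paper reviewed: "supports",
    "contradicts", or "inconclusive" (labels chosen for this sketch).
    """
    counts = Counter(verdicts)
    return (
        f'Searched {database} for "{query}": '
        f"{len(verdicts)} papers reviewed: "
        f"{counts['supports']} supporting, "
        f"{counts['contradicts']} contradicting, "
        f"{counts['inconclusive']} inconclusive."
    )


if __name__ == "__main__":
    # Placeholder verdicts, not real findings
    example = ["supports", "contradicts", "supports", "inconclusive"]
    print(summarize_search(
        "PsycINFO", "red color association experiment violence", example))
```

The design choice is simply to report the denominator: the reader sees how many papers the search returned, not just the ones that favor the claim.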

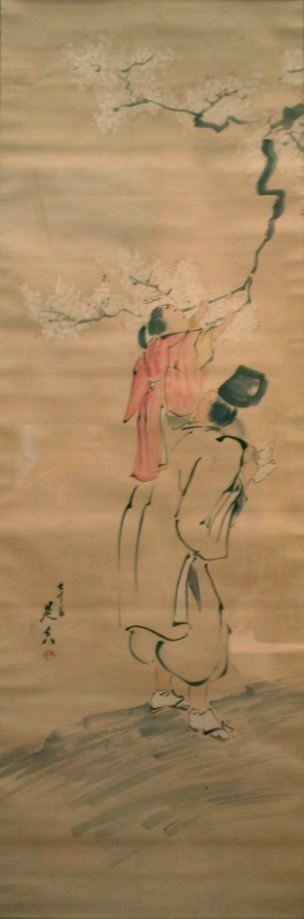

Pictured: Shibata Zeshin (1807-1891)

Boy Picking Cherry Blossoms

Japan, Meiji period (1868-1912), c. 1876

Hanging scroll; ink and color on paper

Gift of Mr. and Mrs. James E. O'Brien, 1978

From Wikimedia Commons
